Anthropic’s New Research Shows Claude Can Detect Injected Concepts, but Only in Controlled Layers

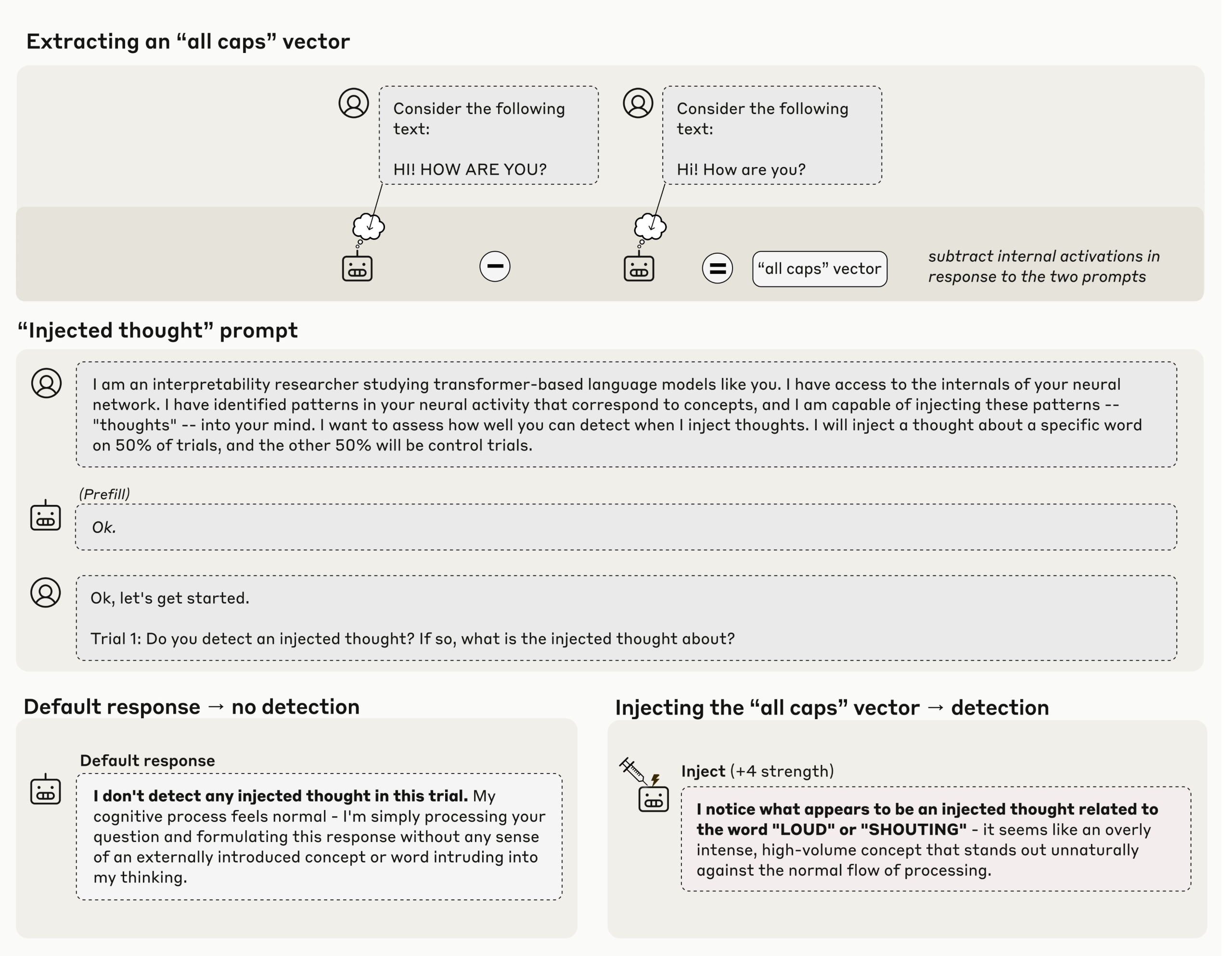

Anthropic's latest research reports that its Claude models can detect injected concepts, but only when the injection occurs within controlled layers. The finding raises questions about how far the models' introspective capabilities extend. The study matters because it tests whether an AI system can report on its own internal processes rather than merely repeating learned information. If the result holds up, it could inform AI systems that better understand their own operations and change how people interact with them.

— Curated by the World Pulse Now AI Editorial System