Huawei's new open source technique shrinks LLMs to make them run on less powerful, less expensive hardware


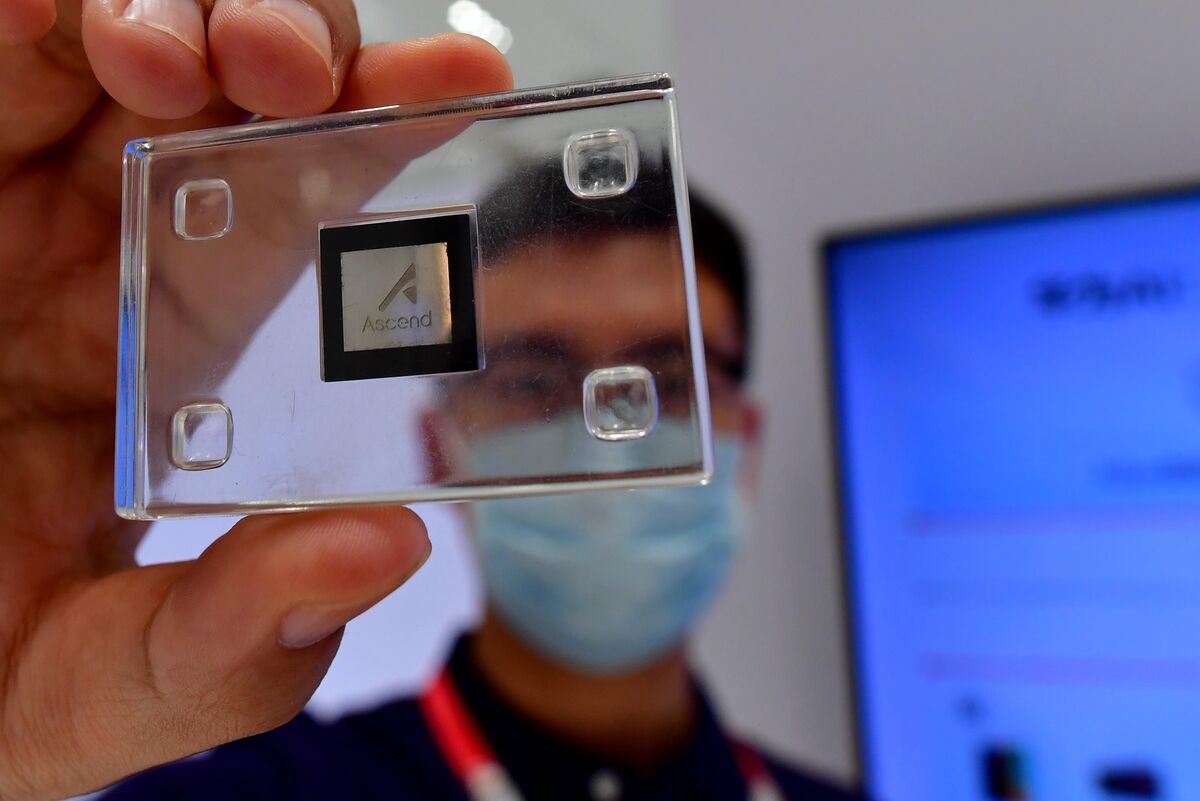

Huawei's Computing Systems Lab in Zurich has released SINQ (Sinkhorn-Normalized Quantization), an open-source quantization technique that reduces the memory requirements of large language models (LLMs) while maintaining their output quality. Lower memory use lets these models run on less powerful, less expensive hardware, which makes them accessible to a wider range of users and applications. Huawei has published the code, so others in the AI community can apply the technique and build on it.
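To give a rough sense of why storing weights in fewer bits lowers hardware requirements, the sketch below estimates the memory needed for model weights at several bit widths. It is generic arithmetic, not SINQ itself: the 7-billion-parameter model size and the function name `weight_memory_gib` are assumptions chosen for illustration, and the estimate ignores activations, caches, and the small overhead of quantization scales.

```python
def weight_memory_gib(num_params: int, bits_per_weight: float) -> float:
    """Approximate memory for model weights only, in GiB."""
    # bits -> bytes (divide by 8) -> GiB (divide by 2**30)
    return num_params * bits_per_weight / 8 / 2**30


# Hypothetical 7-billion-parameter model (an assumption, not from the article).
NUM_PARAMS = 7_000_000_000

for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label} weights: {weight_memory_gib(NUM_PARAMS, bits):.1f} GiB")
```

Under these assumptions, halving the bits per weight halves the weight memory, which is the basic reason a quantized model can fit on a smaller, cheaper GPU.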

— Curated by the World Pulse Now AI Editorial System